How do I prove the impact of my programming?

This is the age-old question in training, facilitation, consulting, and coaching – how do we do it?

How do we show our clients that the work we’re doing has a tangible, measurable impact on the things that matter to them?

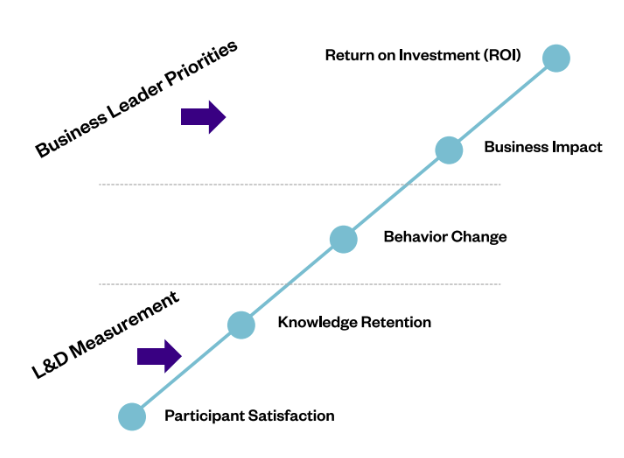

Let’s take a look at the well-known Kirkpatrick-Phillips model.

In case you’re unfamiliar with it, it’s essentially a model for measuring the impact of training at progressively deeper levels, culminating in ROI.

As a point of note, not everyone loves this model. It has plenty of holes, but if you can embrace the logic, regardless of the specifics, it will help you adopt the mindset and methodology you need to prove the impact of your programming to your clients.

There are five levels in the Kirkpatrick-Phillips model.

The first level, from a measurement standpoint, is participant satisfaction. This is the obligatory smile sheet that covers questions like: Did people like the session? Did they enjoy the facilitator? Did they like the sandwiches (if you’re running a live session)?

The second level is knowledge retention. This is the survey provided to participants three, six, or nine months after the session to see how much they remember from the learning event.

The third level is behavior change. This level attempts to answer the question: are participants putting the concepts into practice and realizing personal change?

The fourth level is business impact. This is where we want to know whether, and how, we can show that the training session or learning event had an impact on something the organization already measures internally as a KPI.

Finally, the fifth level is the holy grail: return on investment (ROI). This is where we want to be able to state, with conviction, that the $3 spent here generated $10 there.

This is the direct financial correlation between the money spent on the intervention and the business outcome as it’s measured on the P&L, balance sheet, or asset ledger.
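To make the level-five arithmetic concrete, here is the commonly cited Phillips ROI calculation applied to the illustrative figures of $3 spent and $10 generated. This is a worked sketch only: the dollar amounts are the article’s hypothetical example, not real program data, and in practice “program benefits” must first be isolated from other factors that influence the outcome.

$$
\text{BCR} = \frac{\text{Program benefits}}{\text{Program costs}} = \frac{\$10}{\$3} \approx 3.33
$$

$$
\text{ROI}\,(\%) = \frac{\text{Program benefits} - \text{Program costs}}{\text{Program costs}} \times 100 = \frac{\$10 - \$3}{\$3} \times 100 \approx 233\%
$$

Put another way, each dollar invested would return roughly $2.33 in net benefit on top of the dollar itself.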

At Actionable, we spent a lot of time trying to understand how to get from the lower levels up to the fourth or fifth level.

What we found was that too much time passes, and too many external factors intervene, between these levels, so it’s not realistic to leap directly from what participants learn to proving the true behavior change that drives ROI.

The real opportunity is in laddering up one step at a time.

That means looking at each level, understanding how we can measure it, and then connecting it to the next.

For example, we can measure if participants enjoyed the session through the smile sheet.

We can run the retention survey a few months later to understand how much knowledge was retained at that point.

We can link the two to demonstrate that those who enjoyed the session actually had stronger retention rates.
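Linking the first two levels takes very little tooling. The sketch below is a minimal illustration, assuming you can match each participant’s smile-sheet rating (on a 1 to 5 scale) to their later retention score (0 to 100). The records, the function name `retention_by_satisfaction`, and the “4 or higher means they enjoyed it” cutoff are all hypothetical choices for illustration, not Actionable’s method.

```python
from statistics import mean

# Hypothetical, illustrative records: (smile-sheet rating on a 1-5 scale,
# knowledge-retention score from a follow-up survey on a 0-100 scale).
records = [
    (5, 82), (4, 75), (5, 90), (2, 60), (3, 58), (4, 70), (1, 55),
]

def retention_by_satisfaction(records, threshold=4):
    """Split participants by whether they rated the session at or above
    `threshold` (an assumed cutoff for "enjoyed it"), and return the mean
    retention score of each group as (enjoyed, others).

    Raises statistics.StatisticsError if either group is empty.
    """
    enjoyed = [score for rating, score in records if rating >= threshold]
    others = [score for rating, score in records if rating < threshold]
    return mean(enjoyed), mean(others)

enjoyed_avg, others_avg = retention_by_satisfaction(records)
print(f"Mean retention, enjoyed the session: {enjoyed_avg:.1f}")
print(f"Mean retention, everyone else: {others_avg:.1f}")
```

A gap in group averages like this shows association, not causation; with real data you would want a larger sample and a check such as a correlation coefficient before presenting it to a client.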

However, those first two levels, and the link between them, are where 92% of corporate training programs stop measuring.

This is a missed opportunity, because it’s not as challenging as you may think to go up a level and measure behavior change: give participants the tools to self-assess, then build in some 360-degree assessment to answer the question, “Are behaviors changing?”

If someone participated in the session, we can ask: are they putting those concepts into practice? If so, to what degree?

Answers to these questions (and others) become the building blocks of a regular stream of behavior-change data points, which makes it much easier to link to business impact through two lenses.

One: we end up with many more data points on the efficacy of the training session as it relates to business impact, rather than a single snapshot of knowledge retention.

For example, on our platform we see an average of 6.4 data points per participant over one month.

So if you can look at those data points and begin to draw conclusions, such as which behaviors are shifting and at what level, it becomes quite easy to link that to business impact, because you can show at various levels (or however you choose to slice the data) that this group is realizing a specific behavior change.

Let’s use an example to illustrate this point.

Let’s say participants are enrolled in a training designed to make them more empathetic listeners. Why does that matter?

Because empathetic listening leads to greater psychological safety. Why does that matter?

Because psychological safety leads to greater employee retention. Now the business impact on employee retention can be traced back to the behavior changes that leaders made when they participated in your leadership development program.

When you have data on the behavior change participants realized, and you can then see a noticeable drop in voluntary turnover three or six months later, you are, by extension, one step away from ROI.

Most corporations have already made the link between business impact and ROI, so if we can reach the behavior-change level with enough data points, getting to the business-impact level is fairly easy.

This is the basis of everything Actionable is designed to do from a reporting standpoint.

It’s not just about showing behavior change, although even if you stayed at that level, you would already be ahead of 92% of the programs being run out there.

If you differentiate yourself simply by moving up to behavior change, and you can organize that behavior-change data in a way that makes it easy for your client to trace it to business impact, you have harnessed Actionable’s technology, methodology, and conversations to sustain your learning impact with your clients. This is how you prove the business impact of the programs you deliver.

If you’re curious to learn more, I’d love to chat. You can book a call here, and either I or one of my colleagues will be happy to jump on a call with you to understand your programs in more detail, explain where Actionable would fit within your existing program structure, show you sample reports, and help you decide whether it makes sense to use Actionable in your practice.

If you’re in this headspace of wanting to prove impact, you are ahead of the curve, but the curve is moving quickly. I’d love to explore how we can help.